1.2 Parsing Web Pages: Using the BeautifulSoup Module

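After you download a web page with the requests module, the page source you have to work with contains many different HTML tags and attributes. The more complex the page, the more effort it takes to locate the specific data you need in that source. The BeautifulSoup module parses the page source and makes that data much easier to find.

Installing the BeautifulSoup module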

pip install beautifulsoup4

Creating a BeautifulSoup object

Use bs4.BeautifulSoup() to create a BeautifulSoup object.

>>> import requests, bs4

>>> r = requests.get('https://xkcd.com/')

>>>

>>> r.status_code

200

>>> soup = bs4.BeautifulSoup(r.text, 'html.parser')

>>>

>>> type(soup)

<class 'bs4.BeautifulSoup'>
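The same object can be built from any HTML string, which is handy for experimenting without a network connection. The following is a minimal sketch, assuming a made-up `SAMPLE_HTML` string in place of `r.text`; it names the parser explicitly so that Beautiful Soup does not have to guess one.

```python
import bs4

# Hypothetical HTML standing in for r.text, so no download is needed.
SAMPLE_HTML = """
<html>
  <head><title>Sample page</title></head>
  <body><p id="intro">Hello, <b>soup</b>!</p></body>
</html>
"""

# Naming the parser keeps the result the same on every machine and
# avoids the warning bs4 emits when it has to pick a parser itself.
soup = bs4.BeautifulSoup(SAMPLE_HTML, 'html.parser')

print(type(soup))                   # <class 'bs4.BeautifulSoup'>
print(soup.title.string)            # Sample page
print(soup.find('p').get_text())    # Hello, soup!
```

'html.parser' ships with Python; other parsers such as lxml have to be installed separately and may build a slightly different tree from malformed HTML.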

Finding tag elements with find_all()

Use find_all() with the name parameter to find every matching element in the page source. For example, name='a' matches <a> tags.

>>> import requests, bs4

>>> r = requests.get('https://xkcd.com/')

>>> soup = bs4.BeautifulSoup(r.text, 'html.parser')

>>> a_list = soup.find_all(name='a')  # collect every <a> tag into a list

>>> len(a_list)

42

>>> for tag in a_list:  # print every <a> tag

...     print(tag)

...

<a href="/archive">Archive</a>

<a href="http://what-if.xkcd.com">What If?</a>

<a href="http://blag.xkcd.com">Blag</a>

<a href="http://store.xkcd.com/">Store</a>

<a href="/about" rel="author">About</a>

... (omitted) ...

>>> for tag in a_list:  # print the 'href' value of every <a> tag

...     print(tag.get('href'))

...

/archive

http://what-if.xkcd.com

http://blag.xkcd.com

http://store.xkcd.com/

/about

/

https://live.newscientist.com/speakers/randall-munroe

http://www.rigb.org/whats-on/events-2017/october/public-lunchtime-talk--randall-munroe

http://www.intelligencesquared.com/events/randall-munroe-on-making-complicated-stuff-simple/

https://www.stpetersyork.org.uk/public_lectures/randall_munroe_thing_explainer

https://www.eventbrite.co.uk/e/thing-explaining-with-randall-munroe-tickets-37832289396

https://www.toppingbooks.co.uk/events/ely/randall-murray-thing-explainer/

https://www.eventbrite.co.uk/e/randall-munroe-book-signing-thing-explainer-tickets-38041072873

http://www.cheltenhamfestivals.com/literature/whats-on/2017/randall-munroe/

/1/

/1894/

... (omitted) ...

>>> for tag in a_list:  # keep only the <a> tags that link to an 'http' URL

...     if 'http' in tag.get('href'):  # does the link contain 'http'?

...         print(tag.get('href'))

...

http://what-if.xkcd.com

http://blag.xkcd.com

http://store.xkcd.com/

https://live.newscientist.com/speakers/randall-munroe

http://www.rigb.org/whats-on/events-2017/october/public-lunchtime-talk--randall-munroe

http://www.intelligencesquared.com/events/randall-munroe-on-making-complicated-stuff-simple/

https://www.stpetersyork.org.uk/public_lectures/randall_munroe_thing_explainer

https://www.eventbrite.co.uk/e/thing-explaining-with-randall-munroe-tickets-37832289396

https://www.toppingbooks.co.uk/events/ely/randall-murray-thing-explainer/

https://www.eventbrite.co.uk/e/randall-munroe-book-signing-thing-explainer-tickets-38041072873

http://www.cheltenhamfestivals.com/literature/whats-on/2017/randall-munroe/

http://threewordphrase.com/

http://www.smbc-comics.com/

http://www.qwantz.com

http://oglaf.com/

http://www.asofterworld.com

http://buttersafe.com/

http://pbfcomics.com/

http://questionablecontent.net/

http://www.buttercupfestival.com/

http://www.mspaintadventures.com/?s=6&p=001901

http://www.jspowerhour.com/

http://womenalsoknowstuff.com/

https://techsolidarity.org/

https://medium.com/civic-tech-thoughts-from-joshdata/so-you-want-to-reform-democracy-7f3b1ef10597

http://creativecommons.org/licenses/by-nc/2.5/
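The `'http' in ...` test above drops relative links such as /archive, and it would raise a TypeError for an <a> tag that has no href, because get() returns None in that case. One possible alternative, sketched here under the assumption that the page address is `https://xkcd.com/` (`BASE_URL` and `SAMPLE_HTML` are illustrative names, not part of the session above), is to skip missing values and resolve every link with the standard library's urljoin().

```python
from urllib.parse import urljoin

import bs4

# Hypothetical stand-ins for the downloaded page and its address.
BASE_URL = 'https://xkcd.com/'
SAMPLE_HTML = """
<a href="/archive">Archive</a>
<a href="http://what-if.xkcd.com">What If?</a>
<a href="//c.xkcd.com/random/comic/">Random</a>
<a name="top">no href here</a>
"""

soup = bs4.BeautifulSoup(SAMPLE_HTML, 'html.parser')

absolute_links = []
for tag in soup.find_all(name='a'):
    href = tag.get('href')          # None when the tag has no href attribute
    if href is None:
        continue
    # urljoin leaves absolute URLs alone and resolves relative ones.
    absolute_links.append(urljoin(BASE_URL, href))

for link in absolute_links:
    print(link)
# https://xkcd.com/archive
# http://what-if.xkcd.com
# https://c.xkcd.com/random/comic/
```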

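Use find_all() with the name and attrs parameters to find every matching element. For example, the following finds the <ul> tags whose class is 'comicNav'; a plain string passed as attrs is matched against the CSS class.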

>>> nav_list = soup.find_all(name='ul', attrs='comicNav')

>>> nav_list

[<ul class="comicNav">

<li><a href="/1/">|<</a></li>

<li><a accesskey="p" href="/1894/" rel="prev">< Prev</a></li>

<li><a href="//c.xkcd.com/random/comic/">Random</a></li>

<li><a accesskey="n" href="#" rel="next">Next ></a></li>

<li><a href="/">>|</a></li>

</ul>,

<ul class="comicNav">

<li><a href="/1/">|<</a></li>

<li><a accesskey="p" href="/1894/" rel="prev">< Prev</a></li>

<li><a href="//c.xkcd.com/random/comic/">Random</a></li>

<li><a accesskey="n" href="#" rel="next">Next ></a></li>

<li><a href="/">>|</a></li>

</ul>]

>>> nav_list = list(set(nav_list))  # use set() to drop the duplicate entry

>>> for tag in nav_list:

...     print(tag)

...

<ul class="comicNav">

<li><a href="/1/">|<</a></li>

<li><a accesskey="p" href="/1894/" rel="prev">< Prev</a></li>

<li><a href="//c.xkcd.com/random/comic/">Random</a></li>

<li><a accesskey="n" href="#" rel="next">Next ></a></li>

<li><a href="/">>|</a></li>

</ul>
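Because a bare string in attrs is shorthand for a class search, the same query can be spelled more explicitly. The sketch below, which runs on a made-up `SAMPLE_HTML` snippet, compares the three spellings and shows one way to remove duplicates while keeping document order, something set() does not guarantee.

```python
import bs4

# Hypothetical markup with the same navigation list appearing twice.
SAMPLE_HTML = """
<ul class="comicNav"><li><a href="/1/">first</a></li></ul>
<ul class="other"><li>unrelated</li></ul>
<ul class="comicNav"><li><a href="/1/">first</a></li></ul>
"""

soup = bs4.BeautifulSoup(SAMPLE_HTML, 'html.parser')

# Three equivalent ways to ask for <ul class="comicNav">.
by_attrs_string = soup.find_all(name='ul', attrs='comicNav')
by_class_kw = soup.find_all(name='ul', class_='comicNav')
by_attrs_dict = soup.find_all(name='ul', attrs={'class': 'comicNav'})

# De-duplicate on the rendered markup; a dict keeps insertion order.
unique_navs = list({str(tag): tag for tag in by_class_kw}.values())

print(len(by_attrs_string), len(by_class_kw), len(by_attrs_dict))  # 2 2 2
print(len(unique_navs))                                            # 1
```

The keyword is spelled class_ with a trailing underscore because class is a reserved word in Python.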

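Use find_all() with name and an attrs dictionary to find every matching element. For example, the dictionary {'href': True} matches tags that have an 'href' attribute, whatever its value.

>>> a_list = soup.find_all(name='a', attrs={'href': True})  # 'href': True means the attribute is present

>>> for a in a_list:

...     print(a)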

...

<a href="/archive">Archive</a>

<a href="http://what-if.xkcd.com">What If?</a>

<a href="http://blag.xkcd.com">Blag</a>

<a href="http://store.xkcd.com/">Store</a>

<a href="/about" rel="author">About</a>

<a href="/"><img alt="xkcd.com logo" height="83" src="/s/0b7742.png" width="185"/></a>

... (omitted) ...

>>> a_dict = {'href': True, 'accesskey': True}

>>> a_list = soup.find_all(name='a', attrs=a_dict)  # both 'href' and 'accesskey' must be present

>>> for a in a_list:

...     print(a)

...

<a accesskey="p" href="/1894/" rel="prev">< Prev</a>

<a accesskey="n" href="#" rel="next">Next ></a>

<a accesskey="p" href="/1894/" rel="prev">< Prev</a>

<a accesskey="n" href="#" rel="next">Next ></a>
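The values in the attrs dictionary do not have to be True; a string value asks for an exact match on that attribute. A small sketch on made-up markup (`SAMPLE_HTML` is an assumption for illustration) shows the difference.

```python
import bs4

# Hypothetical links: three with href, two of those with accesskey.
SAMPLE_HTML = """
<a href="/archive">Archive</a>
<a accesskey="p" href="/1894/">Prev</a>
<a accesskey="n" href="#">Next</a>
<a name="anchor-only">No link</a>
"""

soup = bs4.BeautifulSoup(SAMPLE_HTML, 'html.parser')

# True: the attribute only has to exist.
with_href = soup.find_all('a', attrs={'href': True})
with_both = soup.find_all('a', attrs={'href': True, 'accesskey': True})
# A string: the attribute must have exactly this value.
only_prev = soup.find_all('a', attrs={'accesskey': 'p'})

print(len(with_href), len(with_both), len(only_prev))  # 3 2 1
print(only_prev[0]['href'])                            # /1894/
```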

Use find_all() with the name and string parameters to find every matching element. For example, name='a' with string='About' matches <a ... >About</a>.

>>> str_list = soup.find_all(name='a', string='About')  # the text inside the tag is exactly 'About'

>>> for s in str_list:

...     print(s)

...

<a href="/about" rel="author">About</a>

>>> str_list = soup.find_all(name='a', string=True)

>>> for s in str_list:

...     if 'A' in s.string:

...         print(s)

...

<a href="/archive">Archive</a>

<a href="/about" rel="author">About</a>

<a href="/atom.xml">Atom Feed</a>

<a href="http://www.asofterworld.com">A Softer World</a>

<a href="http://womenalsoknowstuff.com/">Women Also Know Stuff</a>

<a href="http://creativecommons.org/licenses/by-nc/2.5/">Creative Commons Attribution-NonCommercial 2.5 License</a>
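The filter-in-a-loop step can also be folded into the search itself, since the string parameter accepts a compiled regular expression as well as a literal string or True. This is a sketch on made-up markup (`SAMPLE_HTML` is hypothetical), not a replacement for the session above.

```python
import re

import bs4

# Hypothetical links; the last one wraps an image and has no text.
SAMPLE_HTML = """
<a href="/archive">Archive</a>
<a href="/about">About</a>
<a href="/store">Store</a>
<a href="/"><img alt="logo" src="/logo.png"/></a>
"""

soup = bs4.BeautifulSoup(SAMPLE_HTML, 'html.parser')

# Exact match on the tag's text.
exact = soup.find_all('a', string='About')

# Regular-expression match: any <a> whose text contains a capital 'A'.
contains_a = soup.find_all('a', string=re.compile('A'))
texts = [str(tag.string) for tag in contains_a]

print(len(exact))   # 1
print(texts)        # ['Archive', 'About']
```

The image-only link is skipped because its .string is None, so there is no text to match against.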

Finding tag elements with select()

Use select() with a tag-name string 'tag' to find every matching element in the page source. For example, 'link' matches <link> tags.

>>> a_list = soup.select('link')

>>> for a in a_list:

...     print(a)

...

<link href="/s/b0dcca.css" rel="stylesheet" title="Default" type="text/css"/>

<link href="/s/919f27.ico" rel="shortcut icon" type="image/x-icon"/>

<link href="/s/919f27.ico" rel="icon" type="image/x-icon"/>

<link href="/atom.xml" rel="alternate" title="Atom 1.0" type="application/atom+xml"/>

<link href="/rss.xml" rel="alternate" title="RSS 2.0" type="application/rss+xml"/>

Use select() with a string of the form 'tag tag' to find every matching element in the page source. For example, 'head link' matches <link> tags located anywhere inside <head>.

>>> a_list = soup.select('head link')

>>> for a in a_list:

...     print(a)

...

<link href="/s/b0dcca.css" rel="stylesheet" title="Default" type="text/css"/>

<link href="/s/919f27.ico" rel="shortcut icon" type="image/x-icon"/>

<link href="/s/919f27.ico" rel="icon" type="image/x-icon"/>

<link href="/atom.xml" rel="alternate" title="Atom 1.0" type="application/atom+xml"/>

<link href="/rss.xml" rel="alternate" title="RSS 2.0" type="application/rss+xml"/>

>>> a_list[0]

<link href="/s/b0dcca.css" rel="stylesheet" title="Default" type="text/css"/>

>>> a_list[0].parent  # get the parent tag that contains this <link> tag

<head>

<script>

(function(i,s,o,g,r,a,m){i['GoogleAnalyticsObject']=r;i[r]=i[r]||function(){

(i[r].q=i[r].q||[]).push(arguments)},i[r].l=1*new Date();a=s.createElement(o),

m=s.getElementsByTagName(o)[0];a.async=1;a.src=g;m.parentNode.insertBefore(a,m)

})(window,document,'script','https://www.google-analytics.com/analytics.js','ga');

ga('create', 'UA-25700708-7', 'auto');

ga('send', 'pageview');

</script>

<link href="/s/b0dcca.css" rel="stylesheet" title="Default" type="text/css"/>

<title>xkcd: Worrying Scientist Interviews</title>

<meta content="IE=edge" http-equiv="X-UA-Compatible"/>

<link href="/s/919f27.ico" rel="shortcut icon" type="image/x-icon"/>

<link href="/s/919f27.ico" rel="icon" type="image/x-icon"/>

<link href="/atom.xml" rel="alternate" title="Atom 1.0" type="application/atom+xml"/>

<link href="/rss.xml" rel="alternate" title="RSS 2.0" type="application/rss+xml"/>

<script async="" src="/s/b66ed7.js" type="text/javascript"></script>

<script async="" src="/s/1b9456.js" type="text/javascript"></script>

</head>

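Use select() with a string of the form 'tag > tag' to find every matching element in the page source. For example, 'div > span' matches a <span> that is a direct child of a <div>, with no other tag in between.

>>> a_list = soup.select('div > span')

>>> for a in a_list:

...     print(a)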

...

<span><a href="/"><img alt="xkcd.com logo" height="83" src="/s/0b7742.png" width="185"/></a></span>

<span id="slogan">A webcomic of romance,<br/> sarcasm, math, and language.</span>

>>> a_list[1]

<span id="slogan">A webcomic of romance,<br/> sarcasm, math, and language.</span>

>>> a_list[1].parent

<div id="masthead">

<span><a href="/"><img alt="xkcd.com logo" height="83" src="/s/0b7742.png" width="185"/></a></span>

<span id="slogan">A webcomic of romance,<br/> sarcasm, math, and language.</span>

</div>
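The difference between 'tag tag' and 'tag > tag' only shows up when elements are nested more deeply than on the page above. The following sketch uses made-up markup (`SAMPLE_HTML` and its id values are assumptions) in which one <span> is a direct child of the <div> and another is a grandchild.

```python
import bs4

# Hypothetical markup: one <span> directly under <div>, one nested in <p>.
SAMPLE_HTML = """
<div id="masthead">
  <span id="slogan">direct child</span>
  <p><span id="nested">grandchild</span></p>
</div>
"""

soup = bs4.BeautifulSoup(SAMPLE_HTML, 'html.parser')

# 'div span': any <span> anywhere below a <div>.
descendants = [tag['id'] for tag in soup.select('div span')]
# 'div > span': only a <span> whose parent is the <div>.
children = [tag['id'] for tag in soup.select('div > span')]

print(descendants)   # ['slogan', 'nested']
print(children)      # ['slogan']

# select_one() returns the first match (or None) instead of a list.
first = soup.select_one('div > span')
print(first.parent['id'])   # masthead
```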

Use select() with a string of the form 'tag[attr=value]' to find every matching element in the page source. For example, 'div[id=comic]' matches the <div id="comic"> tag.

>>> a_list = soup.select('div[id=comic]')

>>> for a in a_list:

...     print(a)

...

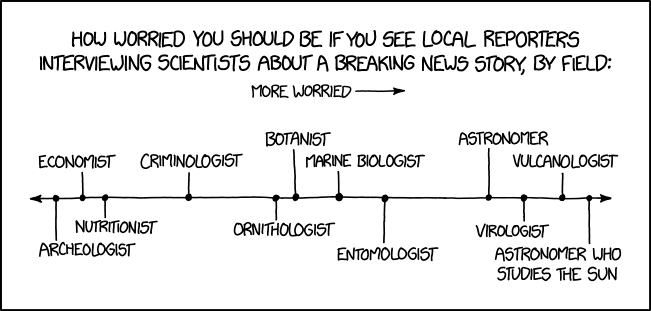

<div id="comic">

<img alt="Worrying Scientist Interviews" src="//imgs.xkcd.com/comics/worrying_scientist_interviews.png" srcset="//imgs.xkcd.com/comics/worrying_scientist_interviews_2x.png 2x" title="They always try to explain that they're called 'solar physicists', but the reporters interrupt with &quot;NEVER MIND THAT, TELL US WHAT'S WRONG WITH THE SUN!&quot;"/>

</div>

>>> a_list[0].find('img')

<img alt="Worrying Scientist Interviews" src="//imgs.xkcd.com/comics/worrying_scientist_interviews.png" srcset="//imgs.xkcd.com/comics/worrying_scientist_interviews_2x.png 2x" title="They always try to explain that they're called 'solar physicists', but the reporters interrupt with &quot;NEVER MIND THAT, TELL US WHAT'S WRONG WITH THE SUN!&quot;"/>

>>> a_src = a_list[0].find('img')['src']

>>> a_src

'//imgs.xkcd.com/comics/worrying_scientist_interviews.png'

>>> import os.path

>>> os.path.basename(a_src)

'worrying_scientist_interviews.png'

>>> os.path.dirname(a_src)

'//imgs.xkcd.com/comics/'

>>> a_link = 'http:' + a_src

>>> a_link

'http://imgs.xkcd.com/comics/worrying_scientist_interviews.png'

>>> r = requests.get(a_link)

>>> r.status_code

200

>>> with open(os.path.basename(a_link), 'wb') as f:

...     for chunk in r.iter_content(1024):

...         return_value = f.write(chunk)

...

>>>
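The steps in this session can be gathered into two small functions. This is only a sketch under stated assumptions: `find_comic_image`, `download`, `PAGE_URL`, and `SAMPLE_HTML` are names made up here, and the page address is assumed to be `https://xkcd.com/`. It swaps the manual 'http:' prefix for urljoin(), which takes the scheme from the page address, and it reads the file name from the URL path with posixpath rather than os.path, whose results depend on the operating system.

```python
import posixpath
from urllib.parse import urljoin, urlsplit

import bs4
import requests

# Hypothetical stand-ins for the page address and its downloaded source.
PAGE_URL = 'https://xkcd.com/'
SAMPLE_HTML = """
<div id="comic">
<img alt="Worrying Scientist Interviews" src="//imgs.xkcd.com/comics/worrying_scientist_interviews.png"/>
</div>
"""


def find_comic_image(html, page_url):
    """Return (absolute_url, filename) for the first <img> in <div id="comic">."""
    soup = bs4.BeautifulSoup(html, 'html.parser')
    img = soup.select_one('div[id=comic] img')
    if img is None:
        raise ValueError('no <img> found inside <div id="comic">')
    url = urljoin(page_url, img['src'])      # fills in the missing scheme
    filename = posixpath.basename(urlsplit(url).path)
    return url, filename


def download(url, filename):
    """Stream url into filename; raises on HTTP errors. Needs network access."""
    with requests.get(url, stream=True, timeout=10) as r:
        r.raise_for_status()
        with open(filename, 'wb') as f:
            for chunk in r.iter_content(chunk_size=1024):
                f.write(chunk)


image_url, image_name = find_comic_image(SAMPLE_HTML, PAGE_URL)
print(image_url)    # https://imgs.xkcd.com/comics/worrying_scientist_interviews.png
print(image_name)   # worrying_scientist_interviews.png
# download(image_url, image_name)   # uncomment to fetch the file
```

Calling raise_for_status() turns a failed request into an exception, so an error page is never written to disk as if it were an image.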

References

- Beautiful Soup, ♥️

- How to download image using requests

- xkcd.com, ♥️

- Python自動化的樂趣 (Automate the Boring Stuff with Python), Chapter 11, by Al Sweigart, translated by H&C, 碁峰 (GOTOP)